Today’s studio diary is a look at how my brother Mike and I performed a set as Gray Acres on the Star’s End radio program for WXPN in Philadelphia, which took place from 2-3 AM on August 25. We recorded the performance and made it available for free/name your price on Bandcamp:

This is also the first time I’m attempting an audio/podcast version of a studio diary. I did a “mini interview” with Mike to get his thoughts on some elements of the performance and insight into how he achieved his guitar sounds, and paired that with my own walkthrough of my patches to demonstrate some of these sounds and techniques. There’s a lot that I had to leave on the cutting room floor, so I might post the full interview in a separate post later on. Agenda for the audio is as follows:

0:00 - 20:00 intro and interview with Mike

20:00 - 52:42 description of my setup with sound demos

52:42 - end closing remarks

Whether it’s by listening to the audio version of the diary, reading the text version here, or doing both, I hope you enjoy the extra bit of insight into this performance.

About Gray Acres

We’ve been making music as Gray Acres since 2018. It’s more “skeletal” and less defined than anything else we do musically, and was started as an alternative to the more structured arrangements of Hotel Neon and our post-rock projects before that. We made it a point to throw away everything we usually think about when we arrange and structure music, and simply try to write in the loosest way possible, one that allows us plenty of room to improvise in a live setting.

To date, we’ve released 3 studio albums on the Sound in Silence, Whitelabrecs, and Archives labels - all of which are available to hear on Bandcamp. Each one of these songs took on a whole new character in the live set compared to their recorded origins.*

*Side note/observation here. If there is a common thread running through all the music I make across several aliases and projects, it’s that I view a published song as a version of the idea, to be explored and improved upon over time. I’ve never been comfortable calling anything I make “finished.” A track as heard on an album is really just a snapshot in time, or one example of how the idea can be presented. A song is a framework that can be taken into different settings and expressed or experienced in different ways. Even after it’s been published, I’m constantly hearing it differently and the song’s meaning or structure can evolve over time as it’s shaped in performances and experienced with new audiences in new places. So it is with these songs we performed.

About Star’s End

A little about the setting of this performance: since 1976, Star’s End has occupied the 5-hour block from 1 to 6 A.M. Eastern time on the WXPN-FM airwaves. It is the second longest-running program of ambient music on the air today.

Chuck van Zyl, the program’s host for more than 40 years, is a force of nature. He has hosted the program every week throughout that time, all the while releasing his own Berlin School-inspired space music and, since 1992, organizing the Gatherings Concert Series in the majestic sanctuary space of 200-year-old St. Mary’s Church (the original “Ambient Church”!) for artists including Steve Roach, Stars of the Lid, Loscil, Hammock, Hans-Joachim Roedelius and many more (link to show archive here).

I’m grateful that Philadelphia’s music scene has someone like Chuck to provide opportunities for this music, and I owe a lot to his support over the years. I’ve had the good fortune of performing on Star’s End three times and the Gatherings four times with Hotel Neon, and they are some of my favorite musical experiences.

A combination of factors makes Star’s End an inherently more unpredictable set than most:

The late hour: it’s a weird feeling to load in at 11:30 PM, soundcheck at 12:30 AM, and perform at 2 AM. This might not be a big deal for folks used to the culture of dance and adjacent electronic music, but for the majority of people - and especially an “ambient” musician - it isn’t typical to be playing a show in the middle of the night. There is inevitably some mind-altering sleepiness and a bit of a “rebellious” feel to the whole thing.

The studio setting: a subtle but meaningful part of the performance is knowing that you aren’t going to be walking out in front of a crowd of people, but are instead performing in a live broadcast studio. There’s nobody in the whole building aside from a janitor and a few of Chuck’s volunteer staff to witness what you’re doing, and as a result, the performance feels far more intimate and inherently “experimental.” It’s as if you’ve shown up to a friend’s house to have a jam - it immediately puts your mind into “lab mode,” and helps you consider riskier creative ideas you wouldn’t think about trying in a gallery, club, or other venue.

Knowledge of the broadcast: even if the room is empty, you still know that there are invisible recipients of your audio. It’s not a total “free-for-all”; there’s still some pressure to perform and realize something meaningful for the people out there listening. It’s motivating and inspiring to know that you’re reaching a sizable, global group of listeners who would otherwise never hear you play a set in person.

The setup

The basic setup of the performance was this:

I was using my iPad and laptop to re-sample and heavily process some original stems of the songs, while also playing pads made up of orchestral instruments (strings, woodwinds, brass) and synthesizer (Moog Model D).

Mike was playing electric guitar and looping it with pedals and Ableton Live.

I was mixing all of our audio in an Ableton Live session on my laptop.

I decided to use the laptop as the centerpiece of the workflow instead of my iPad, mainly because I knew I wanted to record the set and I was mixing multiple audio sources (Mike’s guitar, my synths, and my iPad) to send to the broadcast feed. It’s far easier to use Live for a fuller session like this, and I was applying some desktop effects to the master bus that aren’t available on iPad like Oeksound’s Soothe 2 and iZotope’s Ozone. I also had a few Kontakt instruments I wanted to use - more on that setup in just a bit.

Here’s the layout in action:

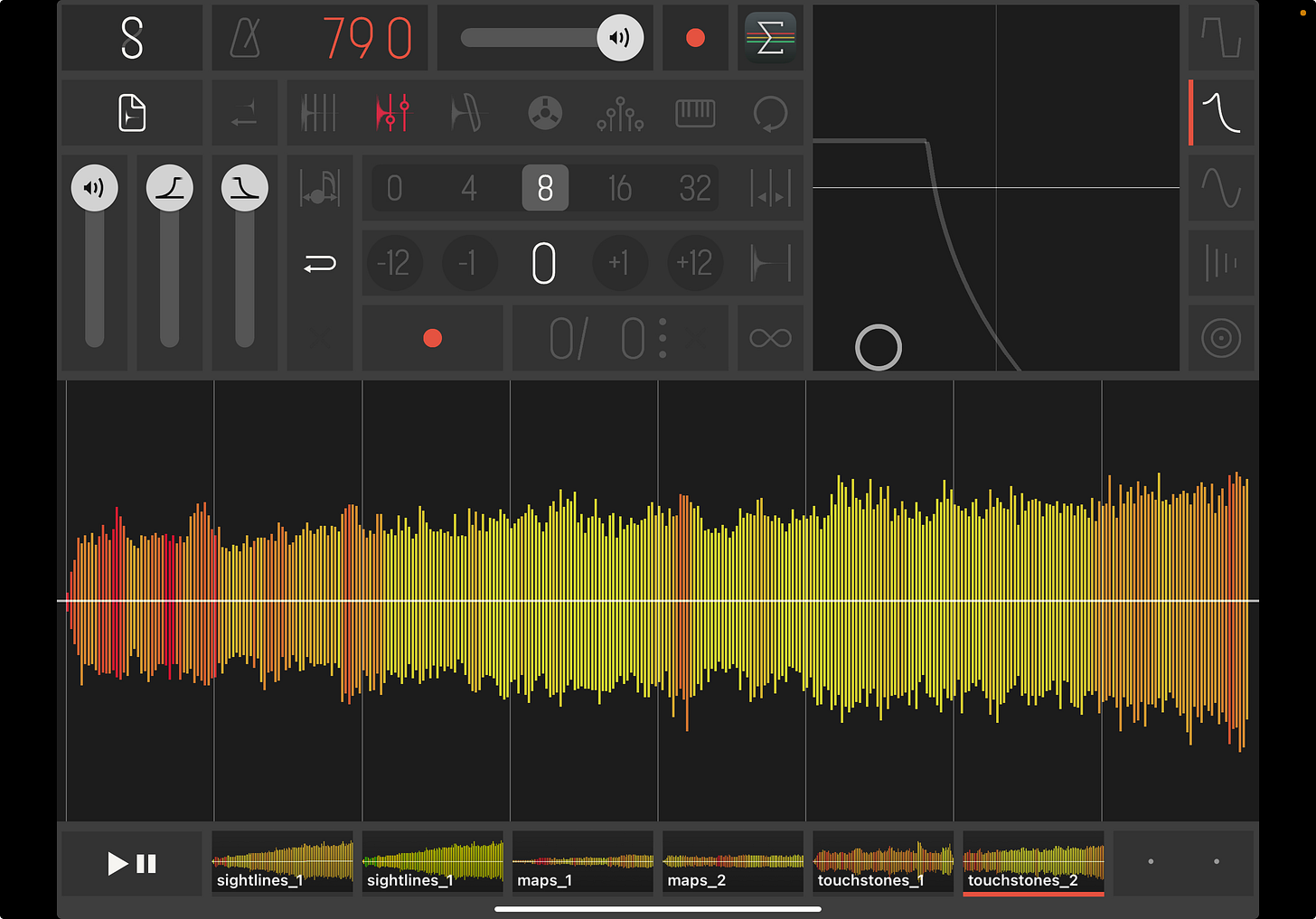

iPad session: creative re-sampling, processing, and mixing of stems

My iPad was running AUM, set up to be a performance mixer for song stems. The objective was to use backing tracks that hinted at the song we were playing, but allow myself the freedom to play them creatively and re-sample, loop, and process them in ways not heard on the original recordings. I never like to simply “press play” on any clips or tracks like this; I like to make them performative and serve as a unique sound source that changes from set to set.

Track 1: the original stems were loaded into Samplr (2 stems per song) and played manually with the “boundary” engine (or whatever it’s called) so that I could loop small pieces of them with my fingers. The output of Samplr runs into track 1 in AUM.

Track 2: The output of my Ableton Live session arrives on track 2 for processing, if I want to direct any outside audio to the iPad and have fun with it.

Tracks 3-5: Every track in AUM can be sent to any of the 3 effects busses set up (the sends are configured to be pre-fader, so I’m not dependent on the track’s level to send a signal to any bus):

Bus A: a 6-track looper using Loopy Pro as an AUv3 effect. This lets me loop as much of the Samplr audio as I want and play it back at varying speeds and pitches to blend in with the source signal.

Bus B: a “lo fi” channel with Unfiltered Audio LO FI AF, ChowDSP Chow Tape, and Audio Damage Replicant 3. All three of these plugins are set to be fairly destructive in nature, so that I can come up with a completely wrecked version of the original source audio that sounds interesting during louder sections, builds, transitions, etc.

Bus C: the “spacey” channel running Imaginando K7D Tape Delay and Toneboosters Reverb.

Note: these effects busses were sent to their own separate output channel (3/4) apart from their unaffected sources, so that if things didn’t sound that great or got too chaotic, I could simply remove those elements from the recording later and not lose the main iPad audio.

Track 6: Fugue Machine, sequencing instruments in Ableton Live. I had a unique pattern available to use for each song. I didn’t use this app much in the live sessions; I just wanted it there so that if I wanted to take my left hand off the Novation keyboard controller to do something else, I could keep the Kontakt instruments running in key and not miss a beat. The playheads are basically extra sets of hands if needed.

I also have an instance of a MIDI controller app called Xequence AU Keys loaded here, configured to play droning notes on the Model D bass synth in Ableton Live on a separate MIDI channel from the Novation controller.

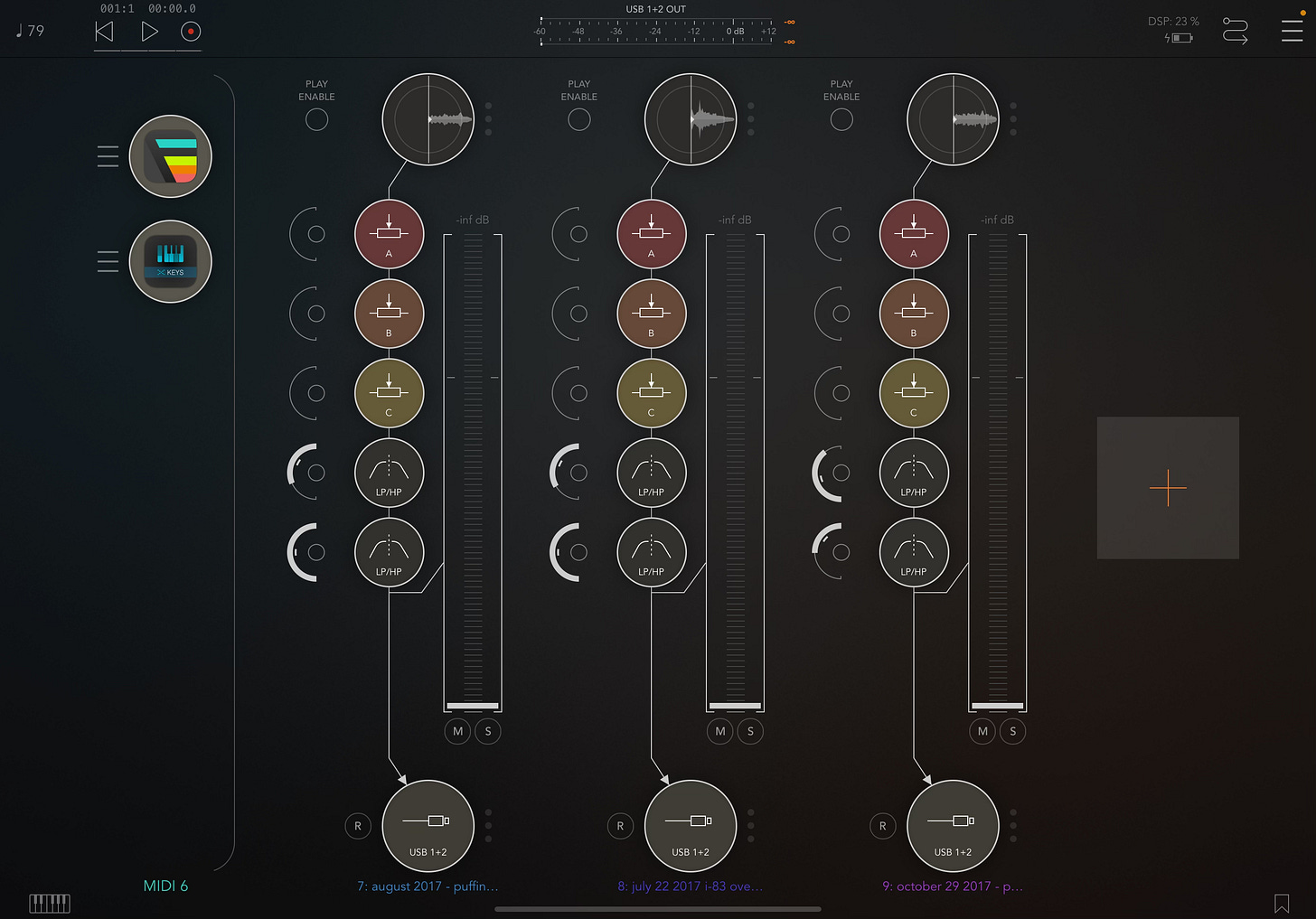

Tracks 7-9: Field recordings from 2017:

one taken outside a cliffside birdwatching hut while observing puffins in the Westman Islands of Iceland;

one underneath a highway overpass near my childhood home in Baltimore; and

one outside my old home in Philadelphia on a rainy day.

These are meant to serve as transitional audio and extra bits of texture with extreme filtering and effects bus processing; to me, they serve as a natural and human counterpoint to all the artificial and synthesized sounds swirling around. I like being able to ground a show with a small personal touch here and there - with heavy processing, field recordings can simulate things like tape hiss and mechanical effects that I love to use anyway, but with the added benefit of having memories and personal attachments.

(sound samples include filter adjustments and effects mangling)
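
For readers less familiar with mixer routing, here is a minimal Python sketch of what a pre-fader send means for tracks 3-5 above. It is only an illustration: the `Track` class, the `route` function, and the gain values are hypothetical and are not taken from AUM or from the actual session. The point is that a pre-fader send taps the signal before the channel fader, so a bus can still be fed while the dry track is pulled all the way down.

```python
from dataclasses import dataclass, field


@dataclass
class Track:
    """Hypothetical mixer channel: one fader plus per-bus send gains."""
    name: str
    fader: float = 1.0                          # channel fader gain, 0.0 to 1.0
    sends: dict = field(default_factory=dict)   # bus name -> send gain


def route(track, sample, pre_fader=True):
    """Return (dry_output, {bus: level}) for a single input sample value."""
    dry = sample * track.fader
    # A pre-fader send taps the signal before the fader is applied;
    # a post-fader send taps it afterwards.
    tap = sample if pre_fader else dry
    bus_levels = {bus: tap * gain for bus, gain in track.sends.items()}
    return dry, bus_levels


# Samplr track with its fader fully down, still feeding buses A and C.
# The send gains are made-up numbers.
samplr = Track("Samplr", fader=0.0, sends={"A": 0.8, "C": 0.5})
dry, buses = route(samplr, 1.0)
post_dry, post_buses = route(samplr, 1.0, pre_fader=False)
```

With the fader at zero, `dry` is silent while `buses` still carries signal; with `pre_fader=False` the buses go silent too, which is the dependency the pre-fader configuration avoids.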

This iPad configuration looks a lot like how I set up the Octatrack in a live scenario. To use the OT, I’d typically come prepared with a few stems, samples, or field recordings loaded and available to play on a Static Machine, set up creative effects chains to process that audio quite heavily, then loop/re-sample those results alongside selected external audio on a few different Flex Machines, blending and mixing it all together on the fly.

Here are some images of this layout in action; you can hear me talk through and demonstrate these parts in the podcast/audio version of this post.

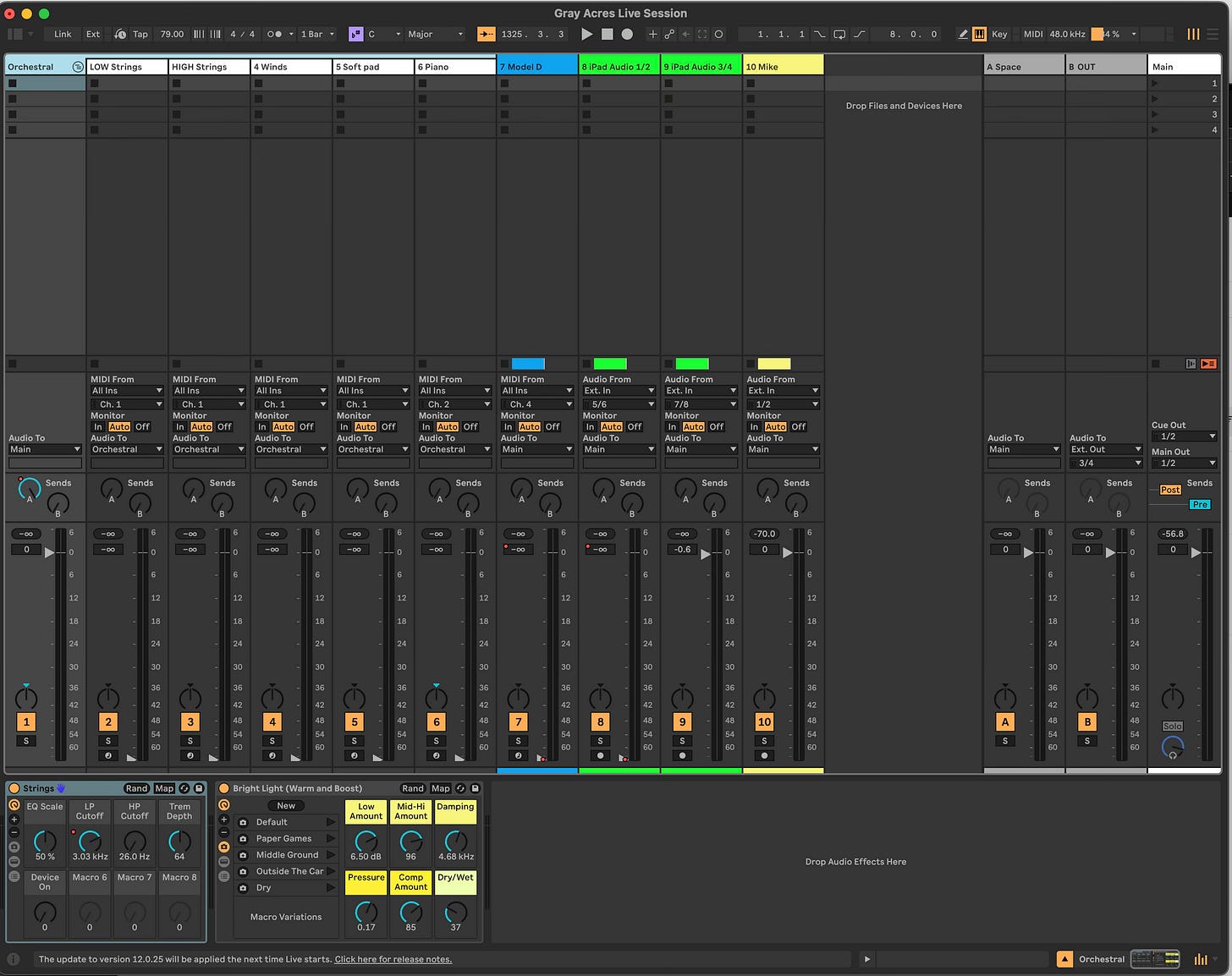

Ableton Live Session

The laptop is the command center for this performance. I’m using it to run Ableton Live and record/mix all audio from me and Mike. The master output from this session was sent to the broadcast booth to play over the air.

The “Orchestral” group contains 5 pieces:

Low string section with cello and bass from Slate & Ash Landforms,

High string section with 2 violins from Landforms,

Woodwinds and brass from Landforms,

A “soft pad” featuring violin harmonics and breathy woodwind reed sounds from Slate & Ash Auras, and

A piano from Native Instruments Noire.

All of these instruments except Noire are running on MIDI Channel 1, so I can play my Novation Launchkey Mini controller to perform them simultaneously and simply mix the levels to create my droning pads. This is the real fun of the set, honestly: shaping huge walls of sound that shift and move to reveal new players, and reacting to the mix in real time.
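
As a rough illustration of that layering, the Python sketch below shows one key press fanning out to every instrument that listens on the same MIDI channel, with the mix levels deciding how much of each layer is heard. The data structure and function names are hypothetical, and putting the Model D on channel 2 is an assumption; the post only says it sits on a separate channel.

```python
# Hypothetical channel map. Instrument names mirror the post;
# the channel number for the Model D is an assumption.
CHANNEL_MAP = {
    1: ["Low strings", "High strings", "Woodwinds and brass", "Soft pad"],
    2: ["Model D bass"],
}


def play(channel, note, levels):
    """Return (instrument, note, level) for each layer on this channel.

    `levels` maps instrument name to a mix level between 0.0 and 1.0;
    instruments missing from `levels` are treated as muted.
    """
    return [
        (name, note, levels.get(name, 0.0))
        for name in CHANNEL_MAP.get(channel, [])
    ]


# Made-up mix levels for one moment in the set.
mix = {"Low strings": 0.9, "High strings": 0.4, "Soft pad": 0.6}

pad_voices = play(1, 60, mix)                    # one key, four layers
bass_voice = play(2, 36, {"Model D bass": 1.0})  # separate channel
unused = play(5, 60, mix)                        # nothing listens here
```

One note on channel 1 reaches all four layers at once, so shaping the pad becomes a matter of moving levels rather than playing more keys.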

You may wonder how I selected which sounds to use for the set, but it’s hard to describe. A lot of it is simply intuition and personal preference. I’ve spent a significant amount of time over several years learning the software in depth and creating patches that suit my playing style. I’m always searching for specific textural elements in each of my patches that can cut through a dense set like this and offer some new place to visit: harmonics that pierce through a mix, breathy textures that add interest, that kind of thing. I try to be conscious of the frequency ranges I want each layer to occupy and make sure that each part brings something new to the table. I also try to take full advantage of the modulation capabilities in these instruments so that I can radically alter the sound with as simple a gesture as possible (a mod wheel adjustment, for instance) and make it easy to add variation and motion over time. Landforms is an immense piece of software, as are Auras and Noire, and there are many ways to modulate and shape the sounds within.

Here’s what the Ableton Live session looks like:

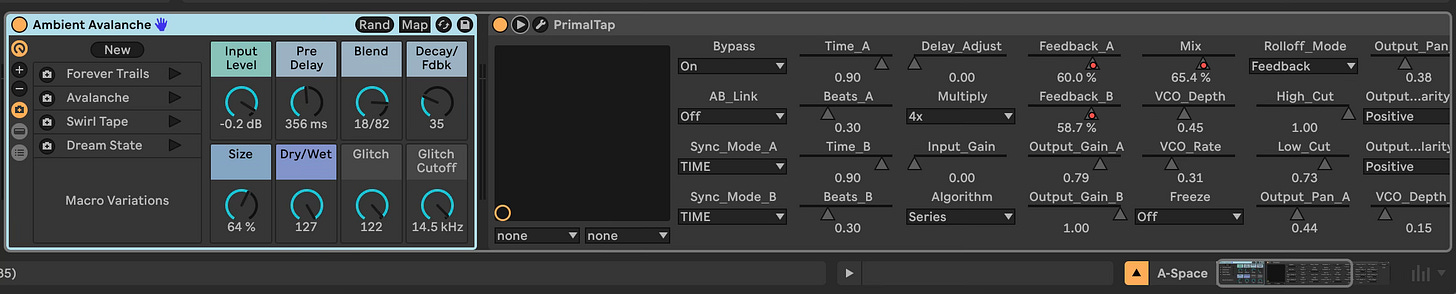

Notice Send A to the right, labeled “Space.” This channel contains an audio effects rack with extremely high feedback delay and lush reverb, as well as Soundtoys Primal Tap delay set to play back extremely degraded, lo-fi repeats. I’m selectively sending bits and pieces of audio there to create even more spacious atmospheres and pads throughout the set. I can control Mix, Feedback, and Decay times of all these effects with macro knobs.

Send B, labeled “Out,” is where I direct audio if I want it to arrive on the iPad as input 7/8 over there. This is nice when I want to sample, loop, or process individual audio streams on the laptop rather than the combined mix.

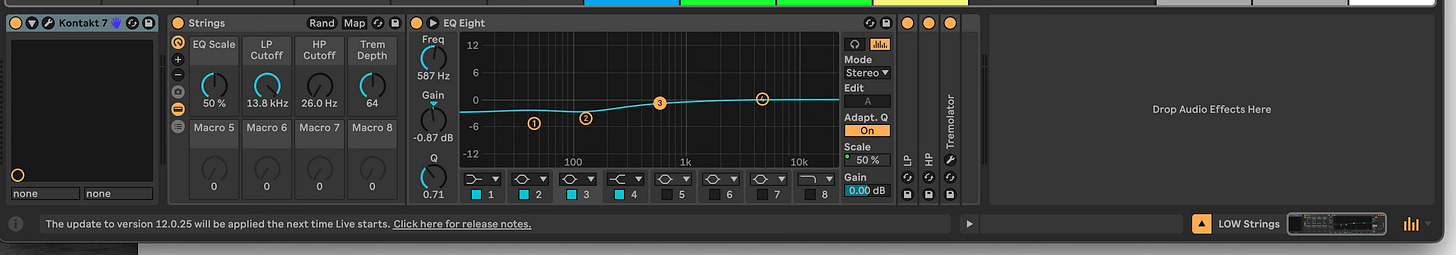

Also, it’s worth noting that I set up the same basic effects rack on every channel for quick and easy tone shaping and mix placement. The rack has 4 effects:

EQ with a macro to adjust Gain Scaling,

Low-pass filter with macro to adjust cutoff frequency,

High-pass filter with cutoff macro, and

Soundtoys Tremolator with slow, 16-bar ramps at various divisions of the master tempo. This makes it easy to fade each part in and out of the mix over time and add variability/subtle movement. There’s a macro knob to control depth of this effect, so it can be turned off completely or increased as needed. The default is 50% depth on every track - I find that this helps parts ebb and flow naturally and prevents them from becoming too fatiguing on the ear in a dense, drone-heavy set like this.
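
To give a feel for what a slow ramp at 50% depth does to a part's level, here is a minimal Python sketch of a generic ramp tremolo. It is not Tremolator's actual algorithm: the linear rising ramp, the depth formula, the 4/4 meter, and the 60 BPM tempo in the example are all assumptions made for illustration.

```python
def tremolo_gain(bar_position, depth=0.5, cycle_bars=16):
    """Gain multiplier for a rising-ramp tremolo.

    bar_position: current position in the song, measured in bars.
    depth: 0.0 leaves the level untouched; 1.0 ramps up from silence.
    cycle_bars: ramp length in bars before it restarts.
    """
    phase = (bar_position % cycle_bars) / cycle_bars   # 0.0 up to 1.0
    return 1.0 - depth * (1.0 - phase)


def cycle_seconds(bpm, cycle_bars=16, beats_per_bar=4):
    """Length of one ramp cycle in seconds (assumes a fixed meter)."""
    return cycle_bars * beats_per_bar * 60.0 / bpm


start = tremolo_gain(0)            # bottom of the ramp at 50% depth
middle = tremolo_gain(8)           # halfway through the 16 bars
bypassed = tremolo_gain(3, depth=0.0)
length = cycle_seconds(60)         # hypothetical tempo of 60 BPM
```

Under these assumptions a part at 50% depth never drops below half its level, it just swells back to full over the cycle, and at 60 BPM in 4/4 one 16-bar ramp takes a little over a minute.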

Mike’s Guitar

The final piece to the puzzle is the guitar that Mike adds throughout. His sound starts with the EHX 720 looper and Strymon El Capistan, which send delay-drenched loops into Ableton Live for additional processing and effects from plugins by Valhalla DSP, Eventide, and a few others.

Much like I’m doing with the iPad, he has 3-4 effects busses configured in advance so that he can mix and route his source audio (his pedalboard’s output) to a variety of different effects chains for looping, pitch-shifting, and additional spaciousness, then focus his attention on creative blending and mixing of these transformations. He uses an Akai MIDI Mix controller to fade in varying amounts of these processed sounds.

Mike has always taken a minimalist approach to the musical projects he participates in, including Hotel Neon and his own solo endeavor, Transient Sounds. This set was no different. You can hear him elaborate on his desire for simplicity and “uncluttered enjoyment of the guitar” in the podcast.

In closing

We had a great time playing this set. It’s nice to have an opportunity like this to explore new territory in a comfortable environment, and we certainly learned a few new things about how we could potentially take these songs to other venues in the future. More live performances would be a wonderful thing, and playing them is something we sincerely hope to do next year. First, we have a few gigabytes of unfinished studio recordings that we aim to complete in the next few months. I’ll be sure to share more about that as the mixing process continues.

Thanks, as always, for reading Sound Methods. If you enjoy what you read and hear, I’d be very grateful to have you subscribe and share the publication. See you next time!